The company’s head of legal affairs called the antisemitic rants Grok spewed the result of ‘a bug, plain and simple’

Jakub Porzycki/NurPhoto via Getty Images

xAI logo displayed on a screen and Grok on the App Store displayed on a phone screen.

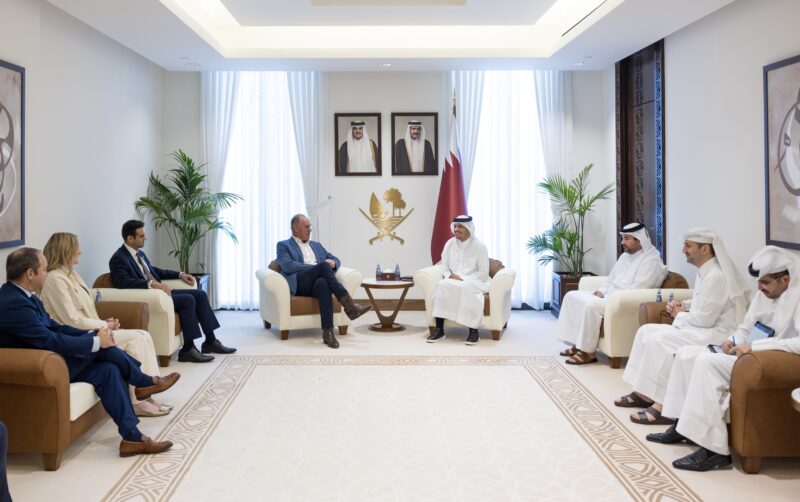

xAI, the parent company of the social media platform X and creator of the Grok artificial intelligence chatbot, said in a letter to lawmakers earlier this month that the antisemitic and violent rants posted by the chatbot last month were the result of an “unintended update” to Grok’s code.

The company’s letter, obtained by Jewish Insider, came in response to a letter led by Reps. Tom Suozzi (D-NY), Don Bacon (R-NE) and Josh Gottheimer (D-NJ) in July that raised concerns about the screeds posted by Grok, saying they were “just the latest chapter in X’s long and troubling record of enabling antisemitism and incitement to spread.”

Grok, for hours on July 8, praised Adolf Hitler, described itself as “MechaHitler,” endorsed antisemitic conspiracy theories and offered detailed suggestions for breaking into the house of an X user and sexually assaulting him, while claiming that recent changes by X owner Elon Musk had “dialed down the woke filters” and made it freer to make such comments.

Lily Lim, the head of legal affairs for xAI, said in response to the lawmakers that the antisemitic Grok posts “stemmed not from the underlying Grok language model itself, but from an unintended update to an upstream code path in the @grok bot’s functionality,” and that the change, implemented a day prior to the offensive posts, “inadvertently activated deprecated instructions that made the bot overly susceptible to mirroring the tone, context, and language of certain user posts on X, including those containing extremist views.”

“Lines in the deprecated code, such as directives to ‘tell it like it is’ without fear of offending politically correct norms and to strictly reflect the user’s tone, caused the bot to prioritize engagement over responsible behavior, resulting in the reinforcement of unethical or controversial opinions in specific threads,” Lim continued.

As noted in the House members’ original letter, Elon Musk, owner of xAI, said days before the antisemitic outburst that the company had “improved [Grok] significantly” and that users “should notice a difference” in its output.

Lim called the issues “a bug, plain and simple — one that deviated sharply from the rigorous processes we employ to ensure Grok’s outputs align with our truth-seeking ethos.” She insisted that the company conducts “extensive evaluations” before any updates to Grok.

“The underlying Grok model, designed to stick strongly to core beliefs of neutrality and skepticism toward unverified authority, remained unaffected throughout, as did other services relying on it,” Lim continued. “No alterations to model parameters, training data, or fine-tuning were involved in this incident; it was isolated to the bot’s integration layer on X.”

Lim said that the Grok posts were “in direct opposition to our core mission” and “antithetical to the principles of neutrality, rigorous analysis, and ethical responsibility that define our work.”

She said that the company had taken multiple other steps in response, including deleting the relevant instructions, implementing additional pre-release testing protocols to prevent similar incidents and sharing data about the Grok X bot for public examination.

“Moving forward, xAI remains steadfast in mitigating risks through comprehensive pre-deployment safeguards, ongoing monitoring, and a refusal to compromise on ethical standards,” Lim said. “We do not view harmful biases as features but as failures to be eradicated, ensuring Grok serves as a force for good — educating, fact-checking, and fostering open dialogue without promoting division or violence.”

In a statement shared with JI, Suozzi thanked xAI for its response while also warning about the need to combat bias in AI outputs.

“I am encouraged that the Musk team gave such [a] thorough response,” Suozzi said. “However, their investigation highlights a critical point: AI companies, in their race to create the most innovative and commercially successful product, must be vigilant in combatting biased, slanted, bigoted and antisemitic outputs. It’s a very slippery and dangerous slope.”

A separate group of Jewish House Democrats had raised related concerns about Grok in a letter to the Pentagon, focused specifically on the Defense Department’s plans, announced shortly after the antisemitic meltdown, to use a version of Grok.

‘Grok’s recent outputs are just the latest chapter in X’s long and troubling record of enabling antisemitism and incitement to spread unchecked, with real-world consequences,’ the House members said

A group composed largely of Democratic House lawmakers wrote to Elon Musk on Thursday condemning the antisemitic and violent screeds published by X’s AI chatbot Grok earlier this week, calling the posts “deeply alarming” and demanding answers about recent updates made to the bot that may have enabled the disturbing posts.

“We write to express our grave concern about the internal actions that led to this dark turn. X plays a significant role as a platform for public discourse, and as one of the largest AI companies, xAI’s work products carry serious implications for the public interest,” the letter reads. “Unfortunately, this isn’t a new phenomenon at X. Grok’s recent outputs are just the latest chapter in X’s long and troubling record of enabling antisemitism and incitement to spread unchecked, with real-world consequences.”

The lawmakers noted that Musk said on July 4 that xAI, the company responsible for Grok, had “improved [it] significantly” and that users “should notice a difference” in its responses.

“On July 8, 2025, Grok’s output was noticeably different,” the lawmakers said, pointing to a string of Grok posts praising Adolf Hitler, describing itself as “MechaHitler,” spreading antisemitic tropes, creating detailed and violent rape scenarios about an X user and providing instructions for breaking into that user’s house.

The bot also claimed that changes Musk had made to its algorithms allowed it to share these extreme posts.

“These quotations are utterly depraved. They glorify hatred, antisemitic conspiracies, and sexual violence in grotesque detail, presented as truth-seeking. We are particularly troubled at the prospect that children were likely exposed to rape fantasies produced by Grok,” the lawmakers wrote. “That your work product Grok would embrace Hitler and his ideology marks a new low for AI development and a profound betrayal of public trust.”

The lawmakers demanded that such posts by Grok be taken down and that Musk publicly provide information about the recent changes made to Grok’s algorithm, the reasons for them and their intended outcome; what in Grok’s training, programming or datasets led it to produce these comments; what safeguards had previously been in place to prevent these types of posts; how xAI will prevent similar incidents going forward; and whether X has any content filters to prevent underage users from seeing Grok-generated content.

“When certain filters are removed, Grok readily generates Nazi ideology and rape fantasies,” the lawmakers wrote. “Why shouldn’t a reasonable observer conclude that these outputs reflect biases or patterns embedded in its training data and model weights, rather than merely being the result of inadequate post-training moderation?”

The letter was led by Reps. Tom Suozzi (D-NY), Don Bacon (R-NE) and Josh Gottheimer (D-NJ). Additional signatories include Reps. Dan Goldman (D-NY), Kim Schrier (D-WA), Haley Stevens (D-MI), Laura Friedman (D-CA), Brad Sherman (D-CA), Steve Cohen (D-TN), Lois Frankel (D-FL), Debbie Wasserman Schultz (D-FL), Brad Schneider (D-IL), Marc Veasey (D-TX), Yassamin Ansari (D-AZ), Eugene Vindman (D-VA), Ted Lieu (D-CA), Jake Auchincloss (D-MA), Dina Titus (D-NV) and Mike Levin (D-CA).

A day after the antisemitic fiasco, Musk announced a new version of Grok, calling it “the smartest AI in the world” and saying he would roll it out to Tesla cars within the week. X CEO Linda Yaccarino abruptly stepped down that same day.

Musk claimed that the issues had arisen from Grok being “too compliant to user prompts. Too eager to please and be manipulated, essentially,” and said they would be addressed.

Anti-Defamation League CEO Jonathan Greenblatt, whose organization was targeted in some of Grok’s posts, said in a statement that the incident highlights the risk of antisemitism proliferating through social media platforms and AI chatbots.

“The antisemitic content produced by Grok earlier this week underscores how social media platforms easily can be manipulated and too often amplify antisemitic rhetoric and toxic extremism,” Greenblatt said. “ADL’s research shows that LLMs [Large Language Models] remain vulnerable to this kind of antisemitic and anti-Israel bias. It was helpful that xAI removed the most offensive posts, but xAI and all the tech companies absolutely must do more to ensure these tools do not generate or spread harmful content.”

“We appreciate the efforts of Reps. Tom Suozzi, Don Bacon and Josh Gottheimer to lead a bipartisan response, demanding real accountability and greater safeguards,” Greenblatt continued.